Gaussian Processes

Definition. A Gaussian process is a random function $f : X \to \R$ such that for any $x_1,..,x_n$, the vector $(f(x_1),..,f(x_n))$ is multivariate Gaussian.

Every GP is characterized by a mean $\mu(\.)$ and a kernel $k(\.,\.)$. We have $$ \htmlClass{fragment}{ f(\v{x}) \~ \f{N}(\v{\mu}_{\v{x}},\m{K}_{\v{x}\v{x}}) } $$ where $\v\mu_{\v{x}} = \mu(\v{x})$ and $\m{K}_{\v{x}\v{x}'} = k(\v{x},\v{x}')$.

Bayesian learning: $f \given \v{y}$.
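The conditioning step $f \given \v{y}$ can be sketched numerically. This is a minimal illustration, assuming a zero mean, a squared exponential kernel, one-dimensional inputs, and a small noise term for numerical stability; the names `sq_exp_kernel` and `gp_posterior` and the toy data are hypothetical and not taken from the slides.

```python
import numpy as np

def sq_exp_kernel(a, b, sigma2=1.0, kappa=1.0):
    """Squared exponential kernel matrix between 1-D input arrays a and b."""
    diff = a[:, None] - b[None, :]
    return sigma2 * np.exp(-diff**2 / (2.0 * kappa**2))

def gp_posterior(x_train, y_train, x_test, noise=1e-6):
    """Posterior mean and covariance of a zero-mean GP at x_test (assumed setup)."""
    K = sq_exp_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    K_s = sq_exp_kernel(x_train, x_test)
    K_ss = sq_exp_kernel(x_test, x_test)
    L = np.linalg.cholesky(K)                       # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    V = np.linalg.solve(L, K_s)
    mean = K_s.T @ alpha                            # K_*x K_xx^{-1} y
    cov = K_ss - V.T @ V                            # K_** - K_*x K_xx^{-1} K_x*
    return mean, cov

# Toy data, chosen only for illustration.
x_train = np.array([-1.0, 0.0, 1.0])
y_train = np.sin(x_train)
x_test = np.linspace(-2.0, 2.0, 5)                  # includes the training inputs
mean, cov = gp_posterior(x_train, y_train, x_test)
```

At the training inputs the posterior mean nearly interpolates the data and the posterior variance is close to zero; away from the data the variance grows back toward the prior.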

Bayesian Optimization

Goal: minimize unknown function $\phi$ in as few evaluations as possible.

- Build GP posterior $f \given \v{y}$ using data $(x_1,\phi(x_1)),..,(x_n,\phi(x_n))$.

- Choose $$ \htmlClass{fragment}{ x_{n+1} = \argmax_{x\in\c{X}} \alpha_{f\given\v{y}}(x) } $$ to maximize the acquisition function $\alpha_{f\given\v{y}}$, for instance expected improvement $\alpha_{f\given\v{y}}(x) = \E_{f\given\v{y}} \max(0, {\displaystyle\min_{i=1,..,n}} \phi(x_i) - f(x))$.

Automatic explore-exploit tradeoff.
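Because $f(x)$ is Gaussian under the GP posterior, the expected improvement expectation has a closed form in terms of the posterior mean and standard deviation at $x$. The sketch below assumes minimization, as in the goal stated above; the function name and the candidate values are hypothetical.

```python
import math

def expected_improvement(mu, sigma, best):
    """E[max(0, best - f)] for f ~ N(mu, sigma^2), where best = min_i phi(x_i)."""
    if sigma <= 0.0:
        # No uncertainty left: the improvement is deterministic.
        return max(0.0, best - mu)
    z = (best - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (best - mu) * cdf + sigma * pdf

# Hypothetical candidates: (posterior mean, posterior standard deviation).
candidates = [(0.0, 1.0), (0.0, 2.0), (-0.5, 0.1)]
best_so_far = 0.0
scores = [expected_improvement(m, s, best_so_far) for m, s in candidates]
next_index = max(range(len(candidates)), key=lambda i: scores[i])
```

The first term rewards candidates whose mean is already below the best value (exploitation), while the second term rewards large posterior standard deviation (exploration).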

Modeling Dynamical Systems with Uncertainty

$$ \htmlData{fragment-index=0,class=fragment}{ x_0 } \qquad \htmlData{fragment-index=1,class=fragment}{ x_1 = x_0 + f(x_0)\Delta t } \qquad \htmlData{fragment-index=2,class=fragment}{ x_2 = x_1 + f(x_1)\Delta t } \qquad \htmlData{fragment-index=3,class=fragment}{ .. } $$
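The recursion above is an Euler discretization, and uncertainty in $f$ turns into uncertainty over trajectories. A minimal sketch, assuming scalar states and using a few hypothetical linear drift functions as stand-ins for samples of $f$ from a GP posterior:

```python
def euler_rollout(f, x0, dt, num_steps):
    """Iterate x_{k+1} = x_k + f(x_k) * dt and return [x_0, ..., x_num_steps]."""
    xs = [x0]
    for _ in range(num_steps):
        xs.append(xs[-1] + f(xs[-1]) * dt)
    return xs

# Hypothetical stand-ins for posterior samples of the dynamics f.
sampled_drifts = [lambda x, a=a: -a * x for a in (0.8, 1.0, 1.2)]

# One trajectory per sampled dynamics function; their spread reflects model uncertainty.
trajectories = [euler_rollout(f, x0=1.0, dt=0.1, num_steps=3) for f in sampled_drifts]
```

Each trajectory starts from the same $x_0$, and the disagreement between trajectories grows with the number of steps.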

Model-based Reinforcement Learning

Geometry-aware Gaussian Processes

Matérn Gaussian Processes

$$
\htmlData{class=fragment fade-out,fragment-index=9}{ \footnotesize \mathclap{ k_\nu(x,x') = \sigma^2 \frac{2^{1-\nu}}{\Gamma(\nu)} \del{\sqrt{2\nu} \frac{\norm{x-x'}}{\kappa}}^\nu K_\nu \del{\sqrt{2\nu} \frac{\norm{x-x'}}{\kappa}} } }
\htmlData{class=fragment d-print-none,fragment-index=9}{ \footnotesize \mathclap{ k_\infty(x,x') = \sigma^2 \exp\del{-\frac{\norm{x-x'}^2}{2\kappa^2}} } }
$$

$\sigma^2$: variance

$\kappa$: length scale

$\nu$: smoothness

$\nu\to\infty$: recovers squared exponential kernel
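For half-integer smoothness the Bessel-function expression reduces to well-known closed forms. The sketch below evaluates them as functions of the distance $r = \norm{x-x'}$ under the same parametrization; the function name and the use of `math.inf` to select the squared exponential limit are illustrative choices.

```python
import math

def matern(r, nu, sigma2=1.0, kappa=1.0):
    """Euclidean Matérn kernel at distance r for nu in {1/2, 3/2, 5/2, inf}."""
    if nu == 0.5:
        return sigma2 * math.exp(-r / kappa)
    if nu == 1.5:
        s = math.sqrt(3.0) * r / kappa
        return sigma2 * (1.0 + s) * math.exp(-s)
    if nu == 2.5:
        s = math.sqrt(5.0) * r / kappa
        return sigma2 * (1.0 + s + s * s / 3.0) * math.exp(-s)
    if nu == math.inf:
        # Squared exponential limit.
        return sigma2 * math.exp(-r**2 / (2.0 * kappa**2))
    raise ValueError("only nu in {0.5, 1.5, 2.5, inf} is covered by this sketch")

values_at_unit_distance = {nu: matern(1.0, nu) for nu in (0.5, 1.5, 2.5, math.inf)}
```

All four kernels equal $\sigma^2$ at $r = 0$; they differ in how smoothly they decay away from it.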

Panels: $\nu = 1/2$, $\nu = 3/2$, $\nu = 5/2$, $\nu = \infty$

Riemannian Geometry

How should Matérn kernels generalize to this setting?

Geodesics

$$ k_\infty^{(d_g)}(x,x') = \sigma^2\exp\del{-\frac{d_g(x,x')^2}{2\kappa^2}} $$

Theorem. (Feragen et al.) Let $M$ be a complete Riemannian manifold without boundary. If $k_\infty^{(d_g)}$ is positive semi-definite for all $\kappa$, then $M$ is isometric to a Euclidean space.
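The obstruction can be checked by hand on the unit circle, which is complete, has no boundary, and is not isometric to a Euclidean space. The sketch below assumes four equally spaced points and $\kappa = 2$, both chosen only for illustration, and evaluates the quadratic form $v^\top K v$ for an alternating-sign vector $v$.

```python
import math

def geodesic_distance_circle(theta1, theta2):
    """Arc-length distance between two angles on the unit circle."""
    diff = abs(theta1 - theta2) % (2.0 * math.pi)
    return min(diff, 2.0 * math.pi - diff)

def geodesic_sq_exp(theta1, theta2, sigma2=1.0, kappa=1.0):
    """Squared exponential kernel with the geodesic distance plugged in."""
    d = geodesic_distance_circle(theta1, theta2)
    return sigma2 * math.exp(-d**2 / (2.0 * kappa**2))

def quadratic_form(points, weights, kappa):
    """Compute v^T K v for the kernel matrix K on the given points."""
    return sum(
        wp * wq * geodesic_sq_exp(p, q, kappa=kappa)
        for p, wp in zip(points, weights)
        for q, wq in zip(points, weights)
    )

points = [0.0, 0.5 * math.pi, math.pi, 1.5 * math.pi]
weights = [1.0, -1.0, 1.0, -1.0]
value = quadratic_form(points, weights, kappa=2.0)  # negative, so K is not PSD
```

A negative quadratic form means the kernel matrix has a negative eigenvalue, so no Gaussian process can have this function as its covariance at $\kappa = 2$.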

Need a different candidate generalization

Stochastic Partial Differential Equations

$$ \htmlData{class=fragment,fragment-index=0}{ \underset{\t{Matérn}}{\undergroup{\del{\frac{2\nu}{\kappa^2} - \Delta}^{\frac{\nu}{2}+\frac{d}{4}} f = \c{W}}} } \qquad \htmlData{class=fragment,fragment-index=1}{ \underset{\t{squared exponential}}{\undergroup{\vphantom{\del{\frac{2\nu}{\kappa^2} - \Delta}^{\frac{\nu}{2}+\frac{d}{4}}} e^{-\frac{\kappa^2}{4}\Delta} f = \c{W}}} } $$

$\Delta$: Laplacian

$\c{W}$: (rescaled) white noise

Generalizes well to the Riemannian setting

Riemannian Matérn Kernels: compact spaces

$$ k_\nu(x,x') = \frac{\sigma^2}{C_\nu} \sum_{n=0}^\infty \del{\frac{2\nu}{\kappa^2} - \lambda_n}^{-\nu-\frac{d}{2}} f_n(x) f_n(x') $$

$\lambda_n, f_n$: Laplace–Beltrami eigenpairs

Analytic expressions for circle, sphere, ..
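On the unit circle ($d = 1$) the eigenpairs are explicit: the eigenvalues are $\lambda_n = -n^2$, with eigenfunctions $\cos(n\theta)$ and $\sin(n\theta)$, so the series can be truncated and summed directly. The sketch below assumes this sign convention for $\lambda_n$, a truncation at `num_terms` terms, and normalization by the value at zero distance in place of computing $C_\nu$; the function name is hypothetical.

```python
import math

def circle_matern(theta1, theta2, nu=1.5, kappa=1.0, sigma2=1.0, num_terms=200):
    """Truncated Riemannian Matérn kernel on the unit circle (a sketch)."""
    d = 1  # dimension of the circle

    def weight(n):
        # (2 nu / kappa^2 - lambda_n)^(-nu - d/2) with lambda_n = -n^2
        return (2.0 * nu / kappa**2 + n**2) ** (-nu - d / 2.0)

    diff = theta1 - theta2
    total = weight(0)   # constant eigenfunction
    norm = weight(0)    # same series evaluated at diff = 0
    for n in range(1, num_terms + 1):
        # cos(n t) cos(n t') + sin(n t) sin(n t') = cos(n (t - t'))
        total += 2.0 * weight(n) * math.cos(n * diff)
        norm += 2.0 * weight(n)
    return sigma2 * total / norm

k_near = circle_matern(0.0, 1.0)
k_far = circle_matern(0.0, math.pi)
```

By construction the kernel is $2\pi$-periodic and equals $\sigma^2$ when the two points coincide, and every term in the series has a positive coefficient, which is what makes it positive semi-definite.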

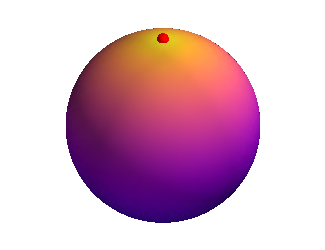

Riemannian Matérn Kernels

$k_{1/2}(\htmlStyle{color:rgb(255, 19, 0)!important}{\bullet},\.)$

Example: regression on the surface of a dragon

Panels: (a) Ground truth, (b) Posterior mean, (c) Standard deviation, (d) Posterior sample

Applications

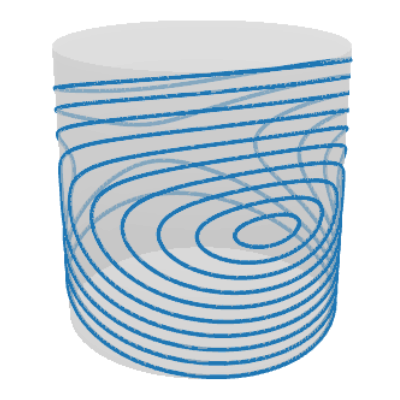

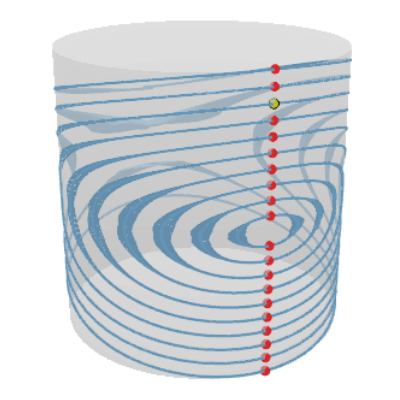

Pendulum Dynamics: GP on a cylinder

Panels: (a) Ground truth, (b) Gaussian process

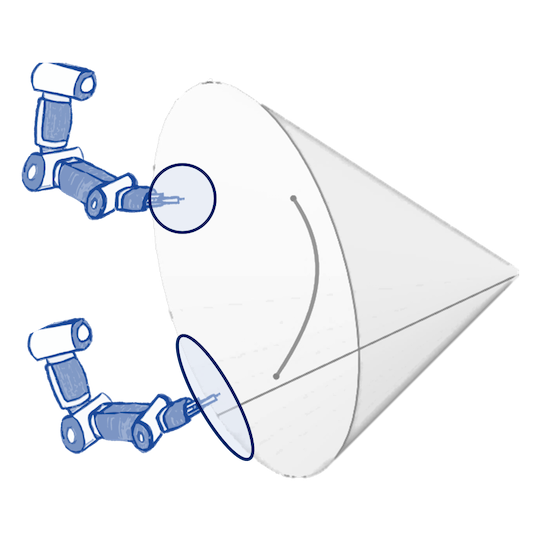

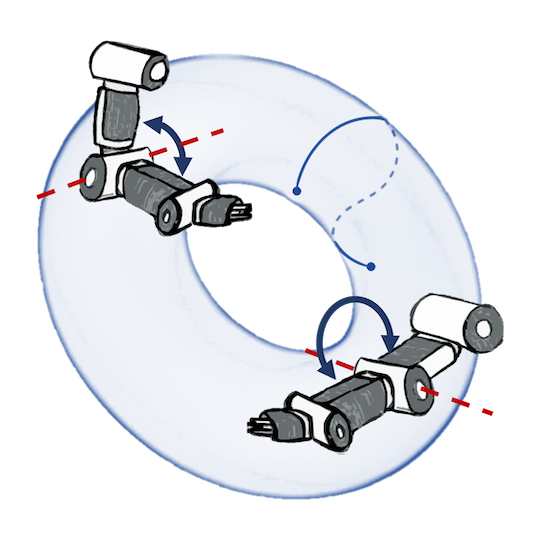

Bayesian Optimization in Robotics

Orientation: sphere

Manipulability: SPD manifold

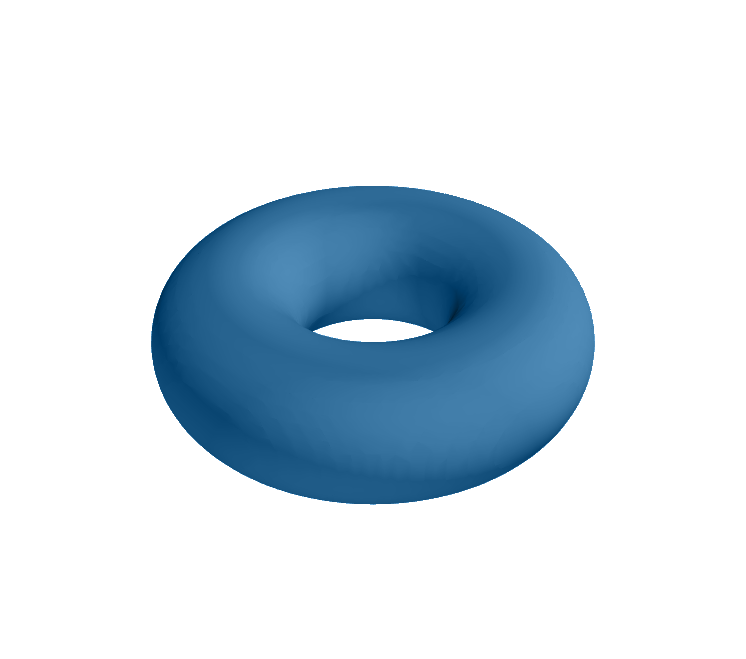

Joint postures: torus

Matérn Gaussian Processes on Riemannian Manifolds

Viacheslav Borovitskiy*, Alexander Terenin*, Peter Mostowsky*, Marc Peter Deisenroth

*equal contribution

Matérn Gaussian Processes on Graphs

Viacheslav Borovitskiy*, Iskander Azangulov*, Alexander Terenin*, Peter Mostowsky*, Marc Peter Deisenroth, Nicolas Durrande

*equal contribution

Thank you!

V. Borovitskiy, I. Azangulov, A. Terenin, P. Mostowsky, M. P. Deisenroth, N. Durrande. Matérn Gaussian Processes on Graphs. Artificial Intelligence and Statistics, 2021.

V. Borovitskiy, A. Terenin, P. Mostowsky, M. P. Deisenroth. Matérn Gaussian Processes on Riemannian Manifolds. Advances in Neural Information Processing Systems, 2020.